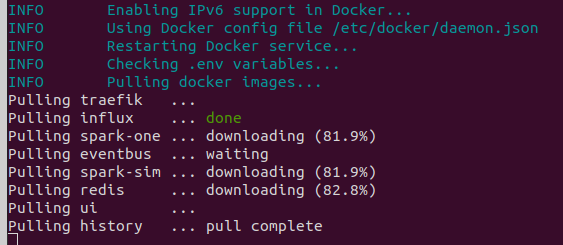

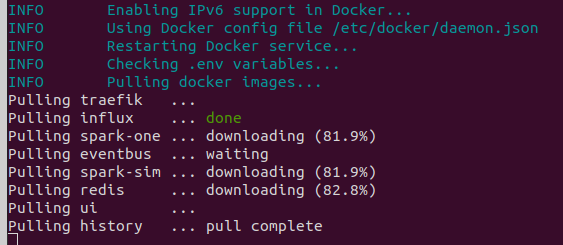

I just tried running an update (last updated in February, so it looks like I’m ~2 updates behind), and the docker image pulls are taking forever. It’s already been more than an hour, and only influx is done and history is at pull complete. spark-one and spark-sim have been downloading, extracting and re-downloading pretty much the entire time. Sometimes the percentage jumps around. My router shows that the Pi is downloading at 800 Kbps, so I don’t think it’s an internet speed problem. Are these just really big images, or is something going on?

Edit: of course, 2 minutes after posting this they all suddenly sped up and finished. Still, the pull took roughly 1 hour and 15 minutes. Is that normal?

Docker images tend to be big, but not that big. I’ll make a note to go and re-check, to see if some bloat snuck in somewhere.

If commands and terminal responsiveness in general also slow down, this may be a sign of an SD card that’s reaching the end of its lifespan.

You can use the agnostics tool to check disk speed:

```
sh /usr/share/agnostics/sdtest.sh
```

Percentages jumping around is pretty normal: Docker downloads image layers in parallel, and the overall progress indicator seems to jump between the percentages of the individual layer downloads.

I’m wondering if the IPv6 fix may be to blame. I plugged the Pi in via ethernet cable during the update to see if that would make the download go faster. It didn’t, but later that evening (after the update completed successfully and I had used the web UI) I unplugged the ethernet cable. Since then, I can’t seem to get the Pi to reconnect to my router on wifi. My router (Google Wifi) shows the device, but it is not assigned an IP address (and it’s not broadcasting the usual mDNS raspberrypi.local either). I’m going to see if I can make the router forget the device, in case an old DHCP lease is interfering with it. Then I can try plugging the ethernet cable back in so I can at least SSH in; otherwise I’ll need to dig up a monitor and keyboard.

Very unlikely, but technically possible.

The previous fix edited /etc/sysctl.conf. You can double-check whether it failed to clean up: the default file on the Pi only contains commented lines (lines starting with #).
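A quick way to do that check is to filter out comments and blank lines, so only active settings remain. This is a minimal sketch: the function name `active_sysctl_lines` is made up for illustration, and it assumes the comment rules from sysctl.conf(5), where lines starting with # or ; are comments.

```shell
#!/bin/sh
# Hypothetical helper: print every line of a sysctl config file that is
# neither blank nor a comment (sysctl.conf treats '#' and ';' as comments).
# Defaults to /etc/sysctl.conf; pass a path to check a different file.
active_sysctl_lines() {
  grep -Ev '^[[:space:]]*([#;]|$)' "${1:-/etc/sysctl.conf}"
}
```

Paste it into a shell on the Pi and run `active_sysctl_lines`. On a default install it should print nothing; any output is a setting that was added later, which may or may not be a leftover from the IPv6 fix.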

Another possibility is that your router got confused by your Pi switching from IPv4 to IPv4 + IPv6, although I don’t think I’ve ever seen that before.

The easiest way to reset the router-side DHCP lease may be to give the device a fixed lease address.

Does it show up when you run a network scanner like Fing?

Hmm. I came back from dinner and used nmap, which I think has similar functionality to Fing.

```
$ nmap -sn 192.168.86.0/24
Starting Nmap 7.80 ( https://nmap.org ) at 2021-06-06 21:03 EDT
Nmap scan report for _gateway (192.168.86.1)
Host is up (0.042s latency).
Nmap scan report for raspberrypi.lan (192.168.86.31)
Host is up (0.0032s latency).
Nmap scan report for 3e0021000551353432383931.lan (192.168.86.32)
Host is up (0.068s latency).
...
Nmap scan report for raspberrypi.lan (192.168.86.102)
Host is up (0.0036s latency).
Nmap done: 256 IP addresses (10 hosts up) scanned in 3.24 seconds
```

It sees both the ethernet (.102) and the wifi (.31) connections to the Pi. I checked, and I can access the Brewblox UI through both. I then went back to the Google Wifi app and saw that it was now listing both the ethernet and wifi connections as well, whereas before it wasn’t listing the wifi connection correctly. I’m not sure whether the issue just resolved itself while I was at dinner, or if perhaps the nmap scan somehow fixed things.

The mDNS is still a little funky. It doesn’t show up using the scanning tool I normally use:

```
$ avahi-browse -at
+ wlp4s0 IPv6 Philips hue - 46336D _hap._tcp local
+ wlp4s0 IPv4 Philips hue - 46336D _hap._tcp local
+ wlp4s0 IPv6 Philips Hue - 46336D _hue._tcp local
+ wlp4s0 IPv4 Philips Hue - 46336D _hue._tcp local
+ wlp4s0 IPv4 Google-Home-da2a8be8a9a411647470947d0d053316 _googlecast._tcp local
```

Despite this, I can access the Brewblox UI through the browser at raspberrypi.local, but many of the blocks load data much more slowly (if at all) compared to the same Brewblox UI open in other tabs using the ethernet or wifi IP address. Previously I had primarily used the mDNS address to access Brewblox and hadn’t noticed any issues like this.

I don’t see any obvious issues with the mDNS daemon on the pi.

```
$ systemctl status avahi-daemon.service
● avahi-daemon.service - Avahi mDNS/DNS-SD Stack
Loaded: loaded (/lib/systemd/system/avahi-daemon.service; enabled; vendor preset: enabled)
Active: active (running) since Sun 2021-06-06 18:07:40 BST; 8h ago
Main PID: 376 (avahi-daemon)
Status: "avahi-daemon 0.7 starting up."
Tasks: 2 (limit: 2062)
CGroup: /system.slice/avahi-daemon.service
├─376 avahi-daemon: running [raspberrypi.local]
└─408 avahi-daemon: chroot helper
Jun 06 18:24:47 raspberrypi avahi-daemon[376]: Interface eth0.IPv6 no longer relevant for mDNS.
Jun 06 18:24:47 raspberrypi avahi-daemon[376]: Withdrawing address record for 169.254.241.61 on eth0.
Jun 06 18:24:47 raspberrypi avahi-daemon[376]: Leaving mDNS multicast group on interface eth0.IPv4 with address 169.254.241.61.
Jun 06 18:24:47 raspberrypi avahi-daemon[376]: Interface eth0.IPv4 no longer relevant for mDNS.
Jun 06 18:24:57 raspberrypi avahi-daemon[376]: Joining mDNS multicast group on interface eth0.IPv6 with address fe80::c14e:dcb1:3
Jun 06 18:24:57 raspberrypi avahi-daemon[376]: New relevant interface eth0.IPv6 for mDNS.
Jun 06 18:24:57 raspberrypi avahi-daemon[376]: Registering new address record for fe80::c14e:dcb1:35bd:522 on eth0.*.
Jun 06 18:25:02 raspberrypi avahi-daemon[376]: Joining mDNS multicast group on interface eth0.IPv4 with address 192.168.86.102.
Jun 06 18:25:02 raspberrypi avahi-daemon[376]: New relevant interface eth0.IPv4 for mDNS.
Jun 06 18:25:02 raspberrypi avahi-daemon[376]: Registering new address record for 192.168.86.102 on eth0.IPv4.
```

I’m guessing that reinstalling avahi-daemon, or just reflashing the whole installation, would probably fix the issue. That said, as long as I can access Brewblox via the IP addresses, I will probably just use those.

This is because avahi-browse shows published mDNS services, not hosts. *.local host addresses are resolved when queried, and won’t show up in browsed results.

The Spark is discoverable because it does publish a service (run avahi-browse -t _brewblox._tcp to check).
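To see what raspberrypi.local actually resolves to, you can query the name explicitly instead of browsing. A minimal sketch, assuming the avahi-utils package (which provides avahi-resolve and avahi-browse) is installed on the machine you run it from; the function name `mdns_address` is hypothetical. Passing -4 or -6 also tells you whether the name resolves to the wifi or the ethernet address, and over which IP version.

```shell
#!/bin/sh
# Hypothetical helper: resolve a .local host name over mDNS and print only
# the address. avahi-resolve prints "<name><TAB><address>" for each result.
# Usage: mdns_address HOST [-4|-6]
mdns_address() {
  mdns_host=$1
  mdns_family=${2:-}
  if [ -n "$mdns_family" ]; then
    # Restrict the lookup to IPv4 (-4) or IPv6 (-6) addresses.
    avahi-resolve "$mdns_family" --name "$mdns_host" | cut -f2
  else
    avahi-resolve --name "$mdns_host" | cut -f2
  fi
}
```

For example, compare mdns_address raspberrypi.local -4 with mdns_address raspberrypi.local -6. For services, avahi-browse -t -r _brewblox._tcp also resolves each published Spark service to its address.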

I would suspect transport problems before DNS issues. Especially with local caching, name resolution tends to either work or fail outright: it is unlikely that the first request resolves fine while subsequent requests are resolved slowly.

If you open the network tab of your browser’s dev tools, reload the page, and then inspect a request, it should include the resolved Remote Address field.

Is this ethernet/wifi, IPv4, IPv6? If you use this IP address directly, do blocks still load slowly?

If IPv6 is problematic, you can use the avahi-daemon config to disable IPv6 advertisement.
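In /etc/avahi/avahi-daemon.conf, that is the use-ipv6 option in the [server] section. A minimal sketch that flips it with sed, assuming GNU sed (as on Raspberry Pi OS) and that the file already contains a use-ipv6= line, commented out or not; the function name `disable_avahi_ipv6` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: set use-ipv6=no in an avahi-daemon config file.
# Rewrites any existing (possibly commented-out) use-ipv6= line in place,
# then succeeds only if the file now contains use-ipv6=no.
# Defaults to /etc/avahi/avahi-daemon.conf, which needs root to edit.
disable_avahi_ipv6() {
  avahi_conf=${1:-/etc/avahi/avahi-daemon.conf}
  sed -i 's/^[#[:space:]]*use-ipv6=.*/use-ipv6=no/' "$avahi_conf" &&
    grep -qx 'use-ipv6=no' "$avahi_conf"
}
```

Run it as root, then restart the daemon with sudo systemctl restart avahi-daemon so the change takes effect. A non-zero exit status means the file had no use-ipv6= line to rewrite; in that case you can add use-ipv6=no under [server] by hand.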