Is there a way to put comment lines in a Sequence block? The objective is to document the opcodes in the sequence.

Not at the moment. This is mostly due to storage space. We can encode instructions as 1-4 numbers, but text can’t be compressed as well.

Storage is mostly a limiting factor for Spark 2/3 models (2 kB), and less so for the Spark 4 (1 MB). We may implement comments anyway, with a disclaimer that we strongly advise against using them on a Spark 2/3.
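To put rough numbers on the size gap, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that each of the 1-4 numbers in an instruction takes 4 bytes and that comment text is stored as uncompressed UTF-8. The real firmware encoding isn't described in this thread, so treat the constants as hypothetical.

```python
BYTES_PER_NUMBER = 4              # assumption: one 32-bit value per number
SPARK_2_3_BUDGET = 2 * 1024       # 2 kB (Spark 2/3)
SPARK_4_BUDGET = 1024 * 1024      # 1 MB (Spark 4)


def instruction_size(num_numbers: int) -> int:
    """Size in bytes of one instruction encoded as 1-4 numbers."""
    if not 1 <= num_numbers <= 4:
        raise ValueError("an instruction is encoded as 1-4 numbers")
    return num_numbers * BYTES_PER_NUMBER


def comment_size(text: str) -> int:
    """Size in bytes of an uncompressed UTF-8 comment."""
    return len(text.encode("utf-8"))


def fits(item_size: int, budget: int) -> int:
    """How many items of item_size bytes fit in a budget of budget bytes."""
    return budget // item_size


comment = "open valve V3 before starting the pump"
print(instruction_size(4))                            # 16
print(comment_size(comment))                          # 38
print(fits(comment_size(comment), SPARK_2_3_BUDGET))  # 53
print(fits(comment_size(comment), SPARK_4_BUDGET))    # 27594
```

Even under these generous assumptions, a single short comment costs more than two worst-case instructions, and a few dozen of them would use up a Spark 2/3's 2 kB on their own.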

That would be nice… I only have Spark 4 controllers.

PS: I find the solution for creating layouts and dashboards very interesting, especially using the grid to place predefined widgets. In my professional life I could use something similar. Are you using a specific library or framework for this? If so, which one? Thanks!

Dashboards and Layouts both involve moderate amounts of in-house code. Neither uses an off-the-shelf solution for grid-based display of widgets.

We use https://d3js.org/ for rendering and event handling in the Builder, and CSS Grid for dashboards. In both cases we implemented our own drag-and-drop handling.

After working with both, we favor SVG for interactive grids, and CSS Grid for automatically generated grid views you can’t drag.
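For readers wondering what "our own drag-and-drop handling" on a grid involves, here is a language-neutral sketch of the core logic, written in Python rather than the d3/SVG code the Builder actually uses. Everything in it (`CELL_SIZE`, `Widget`, `snap_to_cell`, `try_drop`) is hypothetical: snap the pointer position to the nearest cell, keep the widget inside the grid, and reject drops that would overlap another widget.

```python
from dataclasses import dataclass
from typing import List, Tuple

CELL_SIZE = 50  # hypothetical grid cell size in pixels


@dataclass(frozen=True)
class Widget:
    x: int       # column of the top-left cell
    y: int       # row of the top-left cell
    width: int   # in cells
    height: int  # in cells

    def overlaps(self, other: "Widget") -> bool:
        """True if the two rectangles share at least one cell."""
        return (self.x < other.x + other.width and other.x < self.x + self.width
                and self.y < other.y + other.height and other.y < self.y + self.height)


def snap_to_cell(px: float, py: float, cols: int, rows: int,
                 w: int, h: int) -> Tuple[int, int]:
    """Round a pixel position to the nearest cell, clamped so the widget stays on the grid."""
    col = int((px + CELL_SIZE / 2) // CELL_SIZE)
    row = int((py + CELL_SIZE / 2) // CELL_SIZE)
    col = max(0, min(col, cols - w))
    row = max(0, min(row, rows - h))
    return col, row


def try_drop(dragged: Widget, px: float, py: float,
             others: List[Widget], cols: int, rows: int) -> Widget:
    """Return the widget's new position, or its old one if the drop would overlap."""
    col, row = snap_to_cell(px, py, cols, rows, dragged.width, dragged.height)
    candidate = Widget(col, row, dragged.width, dragged.height)
    if any(candidate.overlaps(o) for o in others):
        return dragged  # rejected: the widget snaps back
    return candidate


w = Widget(0, 0, 2, 2)
others = [Widget(4, 4, 2, 2)]
print(try_drop(w, 130, 40, others, 10, 10))   # Widget(x=3, y=1, width=2, height=2)
print(try_drop(w, 190, 190, others, 10, 10))  # Widget(x=0, y=0, width=2, height=2)
```

Keeping this logic separate from rendering is what makes it portable: the same snapping and collision rules apply whether the view is drawn in SVG or laid out with CSS Grid.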

If you want I can give you a short walkthrough of either.

Update: we decided to support comments. Big warnings in the docs about using them on a Spark 2/3 will have to suffice.

This feature will not be included in the next release (due tomorrow or early next week), but in the one after.

Having comments in the Sequence blocks is excellent news! Over time, that will help explain why some parameters are or are not set.

Regarding your walkthrough offer: I spoke to the team about it, and they're very interested in hearing from you and learning the do's and don'ts. Would it be possible to set up a Teams call on a Tuesday or Thursday? Are you based in the Netherlands? We're all based in Belgium.

I will need to check whether Teams works on my Linux machine, but Tuesdays and Thursdays are fine. We're indeed based in the Netherlands.

Are you primarily interested in dashboards (CSS grid), layouts (SVG), or the more broadly applicable things we figured out while experimenting with grid UX/UI?

Hi Bob, my apologies for the late reaction. We're facing some changes in the company/team, so we must wait until the new dynamics are in place…

Nevertheless, in the meantime I continue to build my installations, and I'm now testing my ball valves.

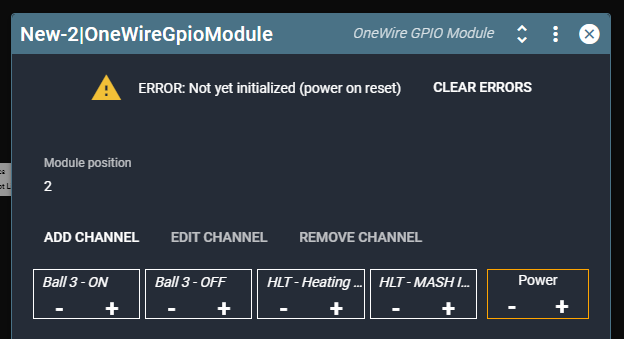

I'm wondering why I receive the following error on my GPIO modules, even after restarting the controller. I'm powering them with an external 24 VDC power supply.

Inrush current could be an issue, although I'd then expect a different error. You can enable slow start in the Digital Actuator to mitigate this. Slow start uses fast PWM to ramp up power from 0 to 100% over the given time.
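To illustrate what "ramp up power from 0 to 100% over the given time" means, here is a small Python sketch. The thread doesn't specify the ramp shape, so a linear ramp is an assumption, and the function names and the 10 ms PWM period are hypothetical; this is not the firmware's implementation.

```python
def slow_start_duty(elapsed_s: float, ramp_s: float, target: float = 100.0) -> float:
    """Duty (%) during an assumed linear ramp from 0 to target over ramp_s seconds."""
    if ramp_s <= 0:
        return target  # no ramp configured: switch straight to full power
    fraction = min(max(elapsed_s / ramp_s, 0.0), 1.0)
    return target * fraction


def on_time_ms(duty_pct: float, period_ms: float) -> float:
    """On-time within one fast PWM period for a given duty."""
    return period_ms * duty_pct / 100.0


# A hypothetical 2 s ramp, sampled at a few points in time
for t in (0.0, 0.5, 1.0, 2.0, 3.0):
    duty = slow_start_duty(t, ramp_s=2.0)
    print(f"t={t:.1f}s duty={duty:.0f}% on-time per 10 ms period={on_time_ms(duty, 10.0):.1f} ms")
```

Spreading the turn-on over the ramp means the load draws its current gradually instead of all at once, which is why slow start can help if inrush current is dipping the supply.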

If that doesn’t solve the issue, you’ll need @Elco for a more expert opinion.

Good luck with the org shuffling =)

This error indicates the IO driver has reset, most likely due to a power dip. When does this occur?

How do you provide power to the spark and the gpio module?

Is the gpio module set to use external power? I assume you have 24V ball valves?

I have 4 GPIO modules on one Spark 4 controller. The error happens when I reboot the controller or just restart the service. I have an external 24 VDC power supply. The ball valves are indeed 24 VDC types, but at present they're not even connected. I'm testing the setup with a voltmeter, just to see whether the GPIO outputs are switching.

So basically I'm just starting up the controller without any load connected.

The power supply (only one GPIO module is connected to it; should I connect them all individually to the power supply?)

View of the controller and the GPIO modules

The controller and the GPIO modules are mounted on a DIN rail; the mounting is not very stable.

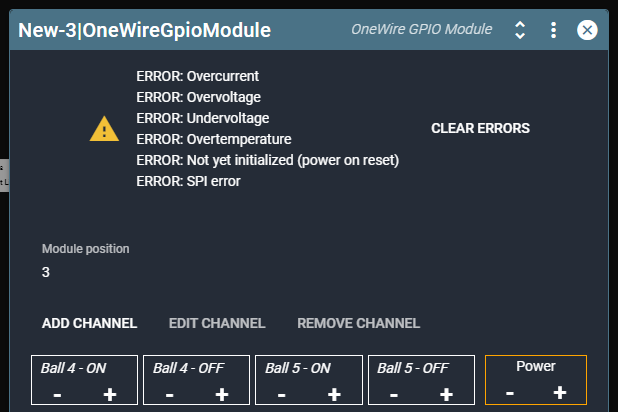

In the meantime I got some other errors as well.

To be complete: the GPIO1 module only gives an overvoltage error, and after clearing the error the module works properly. GPIO1 is also the module that receives direct power.

It's the other modules (#2, #3 and #4) that are showing all kinds of errors, and those errors cannot be cleared.

If you get all errors at once, that's usually because there is no communication at all with the module.

Connecting power to 1 module is enough.

Check that all headers are inserted far enough.

The second one looks barely inserted.

I pressed all GPIO modules in, and that seems to help; nevertheless, the GPIO modules are not mounted quite straight on the DIN rail. I know you were working on a new way of fixing modules on DIN rails; is the new solution already available? As it is now, I fear that I will encounter bad contacts.

Anyway, is it possible that only one output (in my case pins 5 and 6 on GPIO #3) is not working? Short-circuited?

What is the best way to replace a GPIO module? Do I first have to connect all blocks to a mock-up pin block and then replace the GPIO module, or can I simply exchange the broken one for the new one and have everything keep working? Does the Spark 4 controller recognise individual boards, or only their order?

The reason for asking is that I have 4 GPIO modules and my GPIO #3 module has a broken output.

GPIO modules are identified by their position. You can exchange the hardware without making any software changes to the channel configuration.
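One way to picture "identified by their position" is a configuration keyed by slot rather than by board. The firmware's internals aren't described here, so this Python sketch is a hypothetical data model (`GpioSlot`, `replace_board` and the serial strings are made up) showing the consequence: swapping the board in slot 3 leaves its channel configuration untouched.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class GpioSlot:
    """Hypothetical model: configuration belongs to a bus position, not to a board."""
    position: int                                  # 1-4, order of the module on the Spark 4
    board_serial: Optional[str] = None             # whichever physical board is plugged in
    channels: Dict[str, List[int]] = field(default_factory=dict)  # channel name -> pins


def replace_board(slots: Dict[int, GpioSlot], position: int, new_serial: str) -> None:
    """Swap the physical board at a position; channel configuration is left as-is."""
    slots[position].board_serial = new_serial


slots = {3: GpioSlot(position=3, board_serial="OLD-BOARD", channels={"ball valve": [5, 6]})}
before = copy.deepcopy(slots[3].channels)
replace_board(slots, 3, "NEW-BOARD")
print(slots[3].channels == before)  # True
```

The flip side of position-based identification is that moving a module to a different position would move its configuration with the position, not with the board.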

Update on comments: we decided to push the current release before ongoing firmware work was ready for release.

Comments are still in the pipeline, and will be bundled with the Digital Input changes.

Hi Bob, thanks for the update.

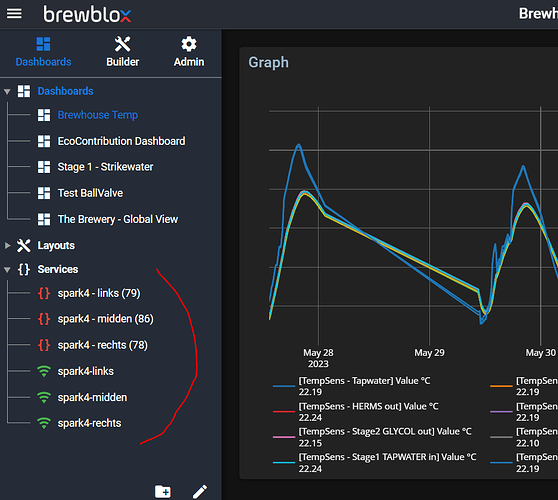

Meanwhile, I have updated the controllers several times, rebooted the controllers, restarted the services and restarted the Raspberry Pi. Now, suddenly, after rebooting the Raspberry Pi, I noticed that the controllers are recognised as different services, so all my links in my layouts and dashboards are broken! How could this happen? What did I do wrong? How can I avoid this?

Thanks for your feedback

Spark services are identified by the service key and the `--name` argument in `docker-compose.yml`.

If your services suddenly changed ID, it may be because the `--name` argument was changed.

See the Brewblox services page of the Brewblox documentation.

I went through the documentation earlier and noticed that I forgot to change some information. Nevertheless, I see that I included the `--name` argument twice, and that although the service name does not match the `--name` argument, the latter was adopted by the software.

I suppose I have to align the service header with the `--name` argument, e.g. renaming `ctl-78` to `spark4-rechts`. Could you please confirm?

What happens if I change the service name in the UI? Does this also change the settings in the YAML file?
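Why would the second `--name` win? The Spark service's own argument parser isn't shown in this thread, but if it behaves like Python's standard `argparse` (an assumption), a repeated option simply keeps its last value. The parser below is a hypothetical minimal one that only mirrors the options in the posted `ctl-78` command.

```python
import argparse
import shlex

# Hypothetical parser mirroring only the options used in the posted command
parser = argparse.ArgumentParser()
parser.add_argument("--name")
parser.add_argument("--discovery")
parser.add_argument("--device-id")
parser.add_argument("--device-host")

command = ("--name=ctl-78 --discovery=all --device-id=10521C86BB18 "
           "--name=spark4-rechts --device-host=192.168.2.185")
args = parser.parse_args(shlex.split(command))
print(args.name)  # spark4-rechts: the later --name overrides the earlier one
```

No error or warning is raised for the duplicate, which is how a mismatch like this can go unnoticed until the service shows up under a different name.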

```yaml
ctl-78:
  command: --name=ctl-78 --discovery=all --device-id=10521C86BB18 --name=spark4-rechts --device-host=192.168.2.185
  image: brewblox/brewblox-devcon-spark:${BREWBLOX_RELEASE}
  privileged: true
  restart: unless-stopped
  volumes:
    - type: bind
      source: /etc/localtime
      target: /etc/localtime
      read_only: true
    - type: bind
      source: ./spark/backup
      target: /app/backup

ctl-79:
  command: --name=ctl-79 --discovery=all --device-id=C4DD5766BE54 --name=spark4-links
  image: brewblox/brewblox-devcon-spark:${BREWBLOX_RELEASE}
  privileged: true
  restart: unless-stopped
  volumes:
    - type: bind
      source: /etc/localtime
      target: /etc/localtime
      read_only: true
    - type: bind
      source: ./spark/backup
      target: /app/backup

ctl-86:
  command: --name=ctl-86 --discovery=all --device-id=C4DD5766BD9C --name=spark4-midden
  image: brewblox/brewblox-devcon-spark:${BREWBLOX_RELEASE}
  privileged: true
  restart: unless-stopped
  volumes:
    - type: bind
      source: /etc/localtime
      target: /etc/localtime
      read_only: true
    - type: bind
      source: ./spark/backup
      target: /app/backup
```
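Since services are identified by the service key and the `--name` argument, a quick consistency check over the `command:` lines can catch this kind of mismatch before a restart. This is a hypothetical helper, not a Brewblox tool. It takes the commands as a plain dict (parsing the YAML itself would need a third-party library such as PyYAML) and assumes the last `--name` is the one that takes effect, which is consistent with what was observed here.

```python
import re
from typing import List

NAME_RE = re.compile(r"--name[= ](\S+)")  # matches --name=value and --name value


def check_service(key: str, command: str) -> List[str]:
    """Return warnings for one Spark service entry (hypothetical helper)."""
    names = NAME_RE.findall(command)
    warnings = []
    if len(names) > 1:
        warnings.append(f"{key}: --name given {len(names)} times: {names}")
    if names and names[-1] != key:
        warnings.append(f"{key}: service key does not match effective --name={names[-1]}")
    return warnings


services = {
    "ctl-78": "--name=ctl-78 --discovery=all --device-id=10521C86BB18 --name=spark4-rechts --device-host=192.168.2.185",
    "ctl-79": "--name=ctl-79 --discovery=all --device-id=C4DD5766BE54 --name=spark4-links",
    "ctl-86": "--name=ctl-86 --discovery=all --device-id=C4DD5766BD9C --name=spark4-midden",
}
for key, command in services.items():
    for warning in check_service(key, command):
        print(warning)
```

All three posted entries trigger both warnings. An entry with a single `--name` that equals its service key produces none.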