Should I remove the previous docker versions?

I’m not sure what you mean. Are you referring to the prompt to prune previous images during the update? If so, you can select “yes” there.

It looks like you still have to flash the firmware (brewblox-ctl flash).

Yes, the sensors are recognized by their unique address, not where you plug them in.

Using the UI I get the following, tried that twice.

Update failed: Request failed with status code 424

Please retry.

If the retry fails, run brewblox-ctl flash

Log messages

Started updating spark-one@/dev/ttyACM0 to version a641578b (2020-09-22 22:47:58 +0200)

Sending update command to controller

Waiting for normal connection to close

Connecting to /dev/ttyACM0

Failed to update firmware: SerialException([Errno 6] could not open port /dev/ttyACM0: [Errno 6] No such device or address: ‘/dev/ttyACM0’)

Scheduling service reboot

So I did $ brewblox-ctl flash

(…)

Your firmware has been updated.

If a new bootloader is required, your Spark will automatically download it over WiFi.

ONLY If your Spark LED keeps blinking blue, run: ‘brewblox-ctl particle -c flash-bootloader’

The light is blinking blue and the screen is blank, so I ran the $ brewblox-ctl particle -c flash-bootloader command.

(…)

Status: Image is up to date for brewblox/firmware-flasher:edge

docker.io/brewblox/firmware-flasher:edge

INFO Stopping services…

Removing network brewblox_default

WARNING: Network brewblox_default not found.

INFO Starting Particle image…

INFO Type ‘exit’ and press enter to exit the shell

Flashing P1 bootloader…

sending file: bootloader-p1.bin

Flash success!

Flash success!

Done.

I am now waiting to see if the UI loads again. Is there a way to set it to auto update? Maybe some cron job?

$ brewblox-ctl log

INFO Log file: /home/pi/brewblox/brewblox.log

INFO Writing Brewblox .env values…

INFO Writing active containers…

INFO Writing service logs…

INFO Writing docker-compose configuration…

INFO Writing Spark blocks…

HTTPSConnectionPool(host=‘localhost’, port=443): Max retries exceeded with url: /spark-one/blocks/all/read (Caused by NewConnectionError(’<urllib3.connection.VerifiedHTTPSConnection object at 0x75cabd50>: Failed to establish a new connection: [Errno 111] Connection refused’))

INFO Writing dmesg output…

INFO Uploading brewblox.log to termbin.com…

https://termbin.com/g05b

The UI does auto-update, but you do need to restart your services (brewblox-ctl up) after flashing the controller with a brewblox-ctl command.

If it’s unclear whether services are active, you can run docker-compose ps.
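As a rough sketch of that sequence: the snippet below starts the services again and then lists their state. It assumes the install directory is ~/brewblox (as in the prompt shown in this thread), and `restart_services` is only an illustrative name, not a brewblox-ctl feature.

```shell
#!/bin/sh
# Sketch only: restart Brewblox services after a flash and list their state.
# BREWBLOX_DIR defaults to ~/brewblox, which is an assumption; adjust it to
# wherever your Brewblox directory lives.
BREWBLOX_DIR="${BREWBLOX_DIR:-$HOME/brewblox}"

# restart_services is an illustrative helper name, not part of brewblox-ctl.
# The body runs in a subshell, so the caller's working directory is unchanged.
restart_services() (
    cd "$BREWBLOX_DIR" &&    # run both commands from the Brewblox directory
    brewblox-ctl up &&       # start the services again
    docker-compose ps        # each service should report the state "Up"
)

# Run on the Pi after "brewblox-ctl flash" has finished:
# restart_services
```

If any service is missing from the list or is not "Up", the UI will not be reachable until it is started.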

Thanks!

pi@raspberrypi:~/brewblox $ brewblox-ctl up

Creating network “brewblox_default” with the default driver

Creating brewblox_redis_1 … done

Creating brewblox_eventbus_1 … done

Creating brewblox_influx_1 … done

Creating brewblox_spark-one_1 … done

Creating brewblox_history_1 … done

Creating brewblox_traefik_1 … done

Creating brewblox_ui_1 … done

pi@raspberrypi:~/brewblox $ docker-compose ps

| Name | Command | State | Ports |
|---|---|---|---|
| brewblox_eventbus_1 | /docker-entrypoint.sh /usr … | Up | 1883/tcp |
| brewblox_history_1 | python3 -m brewblox_history | Up | 5000/tcp |
| brewblox_influx_1 | /entrypoint.sh influxd | Up | 8086/tcp |
| brewblox_redis_1 | docker-entrypoint.sh --app … | Up | 6379/tcp |
| brewblox_spark-one_1 | python3 -m brewblox_devcon … | Up | 5000/tcp |
| brewblox_traefik_1 | /entrypoint.sh --api.dashb … | Up | 0.0.0.0:443->443/tcp, 0.0.0.0:80->80/tcp |
| brewblox_ui_1 | /docker-entrypoint.sh ngin … | Up | 80/tcp |

Seems that it is all in order now. I created a new log just now but it might be a bit too soon to diagnose anything.

You may want to run brewblox-ctl disable-ipv6 if you haven’t already, but otherwise everything looks connected and ok.

Yes, it looks like it was already disabled.

Here is the new termbin 1 hour later https://termbin.com/5km6

Do you still have issues with accessing SSH on your Pi?

Connection status / logs look ok, with the exception of voltage reported by your Spark.

It still reports 9V on the 5V power supply.

What is the output of the vcgencmd measure_volts command?

How is your Spark powered? (through the Pi, own adapter, from voltage?)

volt=1.2438V

It is powered by the pi itself.
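For context, `vcgencmd measure_volts` without an argument reports the Pi's core voltage, which is why the reading is around 1.2 V and not 5 V. If the value is needed in a script, it can be pulled out of that output with something like the sketch below; `parse_volts` is a made-up helper name used only for illustration.

```shell
#!/bin/sh
# parse_volts is a hypothetical helper: it extracts the number from a line
# such as "volt=1.2438V" and prints nothing if the line does not match.
parse_volts() {
    printf '%s\n' "$1" | sed -n 's/^volt=\([0-9][0-9.]*\)V$/\1/p'
}

# On a Raspberry Pi this would be: parse_volts "$(vcgencmd measure_volts)"
# Here the value posted in this thread is used instead:
parse_volts "volt=1.2438V"   # prints 1.2438
```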

$ uname -a

Linux raspberrypi 5.4.51-v7+ #1333 SMP Mon Aug 10 16:45:19 BST 2020 armv7l GNU/Linux

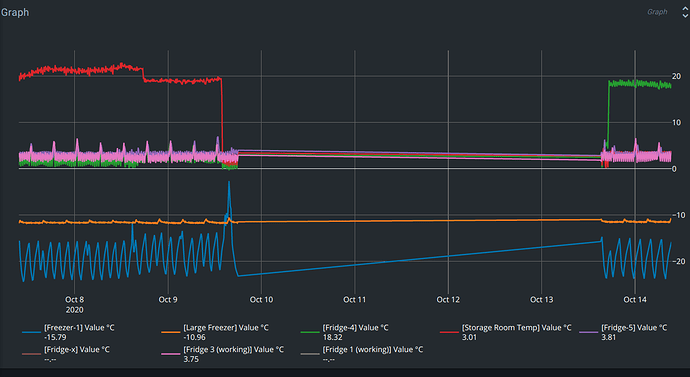

Does the gap start when you started updating your system, or before that?

Edit: we also discussed the strange reading on your voltage sensor. The most likely explanation is that it’s a firmware bug. If your Spark otherwise works as expected, then don’t worry about it.

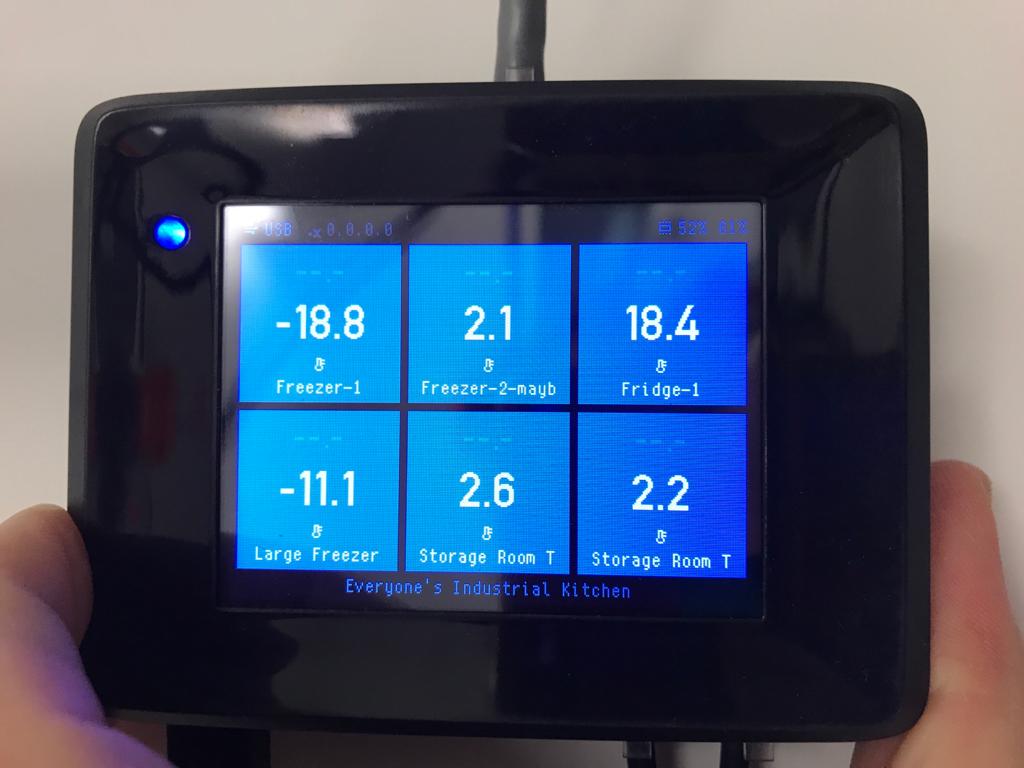

Can you tell me what the memory percentages are on the top right of the display?

Can you export your blocks from the service page of the Spark (top right menu)?

Can you dump your EEPROM so I can get an exact copy of your Spark (brewblox-ctl particle -c eeprom)?

The 5V supply voltage being read as 9V puzzles me.

The value is provided by the touch screen chip, which reads its internal supply voltage. With power only coming from USB, it makes no sense that a higher voltage is read. This leaves 2 options:

- A hardware problem, but I cannot think of one yet that would give symptoms like this.

- A software bug, most likely something memory related.

It starts more or less at the same time, not completely sure. I updated on Friday and that is when it stopped recording so it would make sense.

52% and 61%

brewblox-blocks-spark-one.txt (3.3 KB)

JSON file renamed to txt attached.

Uploaded 1 file, 131 072 bytes

wget https://bashupload.com/xxrSD/eeprom.bin

Hope this helps!

You are only using this to monitor a few temperatures.

You are hardly using any memory and the Spark is not doing much at all. That rules out many software bugs.

I think the gap in data is indeed because the system was not brought back up after updating.

After doing the above I lost the interface while still being connected via SSH. I remembered to run $ brewblox-ctl up and it is now up again. Here is the $ brewblox-ctl log output: https://termbin.com/9kyjh

Yes, for the eeprom dump command we take down the normal services, so you had to start them back up.