Hey guys, hope this isn’t a repeat of an existing post (didn’t find anything when I looked).

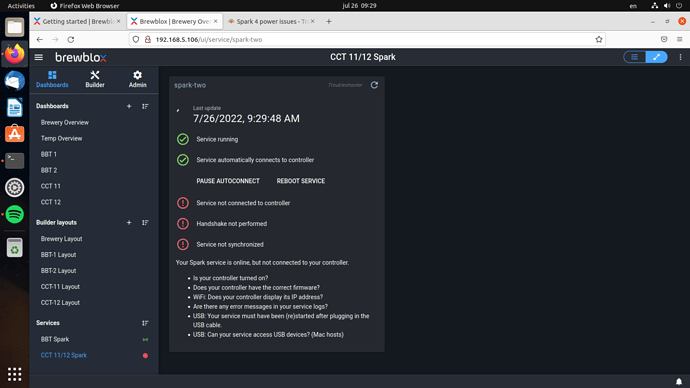

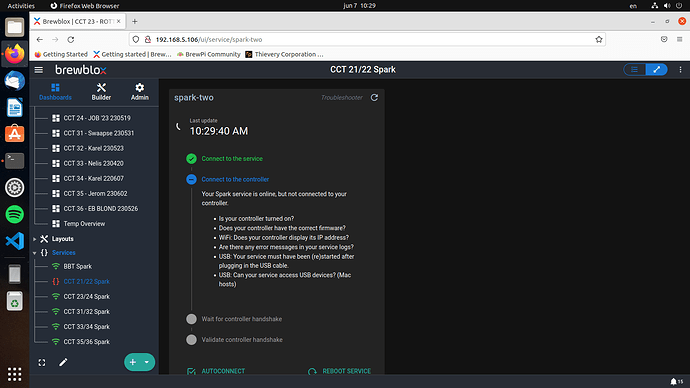

I have been experiencing issues with my two Spark 4s sometimes loosing connection to my controller (Raspberry Pi) and being unable to reconnect. It happens to both of the Sparks intermittently and so far the only fix I have found is to power cycle the Spark itself (not ideal as I am in a commercial brewery). Below you can see what I see in the UI:

And the log file: https://termbin.com/gxbl

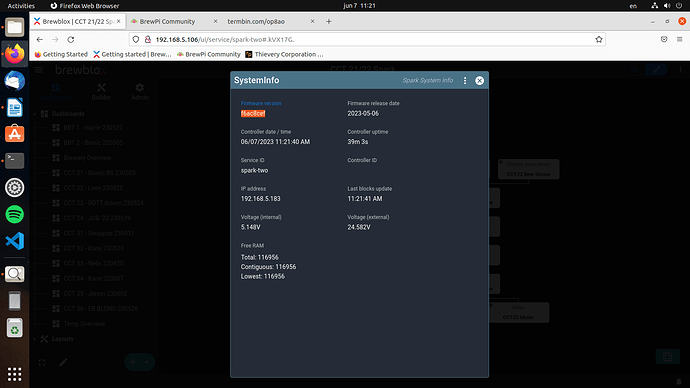

I have noticed that sometimes there is a message saying I need to update the firmware, but having read another post about that issue, I think the firmware message is more of a coincidence than a cause. Today, for example, there is no message about the firmware.

I can leave this Spark in its disconnected state for a while to see if it comes back online, or in case someone wants me to try something specific.

One note: I realized that my controller may be plugged into the “Brewhouse Lights” socket (my fail there), so it is likely offline outside of working hours. Gonna correct this today, but thought I’d mention it in the description.

Cheers.