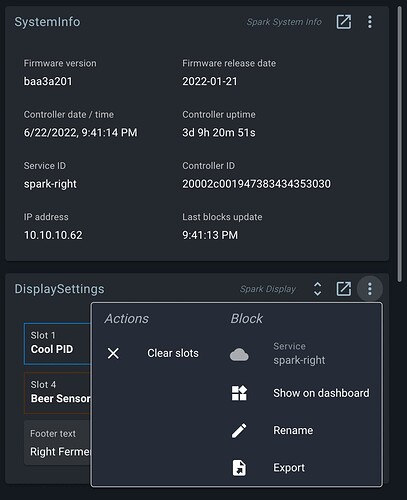

I noticed the Spark 3 display is showing units in C and I would like to change them back to F. If I remember correctly, I thought there was a “Units” setting under the Spark Display Settings block but I am unable to find it. Am I looking in the wrong place? For reference, under Admin > General Settings > Temperature Units, the unit is set to F.

The admin settings control both the UI and the display setting for temp units.

If you switch to Celsius and back in the admin settings, do the on-screen units then update? If not, could you please run `brewblox-ctl log`?

It looks like both Sparks had some hangups, probably causing the change to not be applied.

What do the numbers in the top corner of the screens show?

Do you have any wifi repeaters in range, or is your router's channel mode set to automatic? It’s a known issue that switching between access points or channels can cause problems.

Does the display still show °C if you restart the Spark?

Thanks Bob. I installed a wifi repeater near the Spark controllers then flashed the firmware again and it resolved the issue.

Well, I thought it was fixed. However, the displays keep reverting back to C. In answer to your previous question, the wireless signal level displayed in the top right corner is around 70-80%.

Could you please run brewblox-ctl log again, so we can check whether the spark still suffers from hangups?

If your router and/or repeater are set to automatic channel mode, you’ll want to pick a fixed channel.

Unless the wifi signal is too weak without one, the best results are typically achieved with a fixed channel and no repeater.

Of course. Here is the new log file: https://termbin.com/jxsn. After reading your last post I set the channels to fixed. I started off with channel 2, but moved to channel 6 prior to my last post. I do not have repeaters on my network; my house and brew shop are hard-wired Ethernet/fiber (I am a Cisco guy, yet I still hate wireless). I only use wireless when I must.

It looks like the connection issues were fixed, but it keeps resetting the display setting to degF.

It’s possible the settings are not being persisted correctly.

Could you please run `brewblox-ctl particle -c eeprom`? This will upload the binary data of your stored blocks, so we can check what’s going on there.

Done. Do I need a USB device of some sort to extract the files?

edge: Pulling from brewblox/firmware-flasher

7d63c13d9b9b: Pull complete

bb262aff53d8: Pull complete

f4171a46a6b6: Pull complete

f80531447916: Pull complete

54f8ece7c02a: Pull complete

9307f7bb77be: Pull complete

833c7f192043: Pull complete

89606be69899: Pull complete

e5f98c657703: Pull complete

2828e9ed7a52: Pull complete

Digest: sha256:f568daf84c6541f13342754c377e99bd4b3bd376b6ad7e68ef8dcd6347b1904e

Status: Downloaded newer image for brewblox/firmware-flasher:edge

No compatible USB devices found

Ah, that’s an oversight on my part: the command requires the Spark to be connected over USB, as it uses a system-level debugging command.

If this is not feasible, there are other ways to validate or recreate persistent memory that do not require system debug commands.

No problem. For clarity, do I need to connect the Spark to a Raspberry Pi over USB? If so, that is not an option for me, as I run Brewblox on a TrueNAS Linux VM.

It needs to be connected to the device where you run brewblox-ctl, in your case the NAS.

I believe I have resolved the problem. In my setup, I have a VM that is my main Brewblox installation, and a Raspberry Pi that is used only for Tilt hydrometers. In short, the Pi sends the Tilt information over to my VM. I decided to update the Pi to the latest version of Brewblox, and after noticing that I was still having the issue, I checked the temperature units on the Pi. They were set to Celsius. After setting both to Fahrenheit, I no longer have the issue.

Good to hear it works now, but this does indicate that your Pi also connects to your Spark. If your VM is supposed to control the Spark, the Pi shouldn’t be doing it as well.

To verify, you can check the `docker-compose.yml` file on your Pi, and run `brewblox-ctl remove {name}` for every service you see there with `image: brewblox/brewblox-devcon-spark:${BREWBLOX_RELEASE}`.
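
If it helps, the check of `docker-compose.yml` can be sketched as a small script. This is only a rough illustration, not part of Brewblox: the function name `find_spark_services` and the sample file contents (including the service names `spark-one` and `tilt`) are made up for the example, and the parsing is a simple indentation-based scan that assumes a conventionally formatted compose file rather than full YAML support.

```python
# Rough sketch: list the services in a docker-compose.yml that use the
# Spark service image. Assumes a conventionally indented file; this is a
# plain text scan, not a full YAML parser.

SPARK_IMAGE = "brewblox/brewblox-devcon-spark"


def find_spark_services(compose_text: str) -> list[str]:
    """Return the names of services whose image is the Spark service image."""
    found = []
    in_services = False      # True while inside the top-level `services:` block
    service_indent = None    # indentation level of the service names
    current = None           # name of the service currently being scanned

    for raw in compose_text.splitlines():
        line = raw.split("#", 1)[0].rstrip()  # drop comments and trailing spaces
        if not line.strip():
            continue
        indent = len(line) - len(line.lstrip())
        stripped = line.strip()

        if indent == 0:
            # A new top-level key starts or ends the services block.
            in_services = stripped == "services:"
            service_indent = None
            current = None
            continue
        if not in_services:
            continue
        if service_indent is None:
            service_indent = indent
        if indent == service_indent and stripped.endswith(":"):
            current = stripped[:-1]
        elif current and stripped.startswith("image:"):
            image = stripped[len("image:"):].strip().strip("'\"")
            if image == SPARK_IMAGE or image.startswith(SPARK_IMAGE + ":"):
                found.append(current)
    return found


# Hypothetical file contents, for illustration only.
sample = """\
version: '3.7'
services:
  spark-one:
    image: brewblox/brewblox-devcon-spark:${BREWBLOX_RELEASE}
    privileged: true
  tilt:
    image: brewblox/brewblox-tilt:${BREWBLOX_RELEASE}
"""

for name in find_spark_services(sample):
    print(name)
```

To use it on the Pi, you would pass in the contents of the real `docker-compose.yml` instead of `sample`. Every name it prints is a Spark service that the Pi should not be running if the VM is the one controlling the Spark.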