Could you please run brewblox-ctl log? That should tell us a bit more about what’s happening.

Thanks, you are not running the latest version though. Can you check whether updating to the latest version helps? It probably doesn’t make a difference, but it’s good to try.

After updating, it hasn’t rebooted yet and has broken the reboot trend. This tells me either:

- Something in the update fixed the issue.

- Restarting the containers (from the update) has reset whatever was causing the problem.

I’ll keep an eye out and report back.

The update did contain an updated system layer and a minor change to a timeout on the server side, so that it would be shorter than the watchdog timer on the controller. One theory I had was that a communication error caused a stall on both sides until the watchdog timer on the controller rebooted it.

When the server times out earlier, it sends a new message that breaks the deadlock. The message handling then errors out before the watchdog timer runs out.

This is mostly a theory because I don’t know what would cause the communication deadlock, but perhaps it worked.
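To illustrate the idea, here is a minimal sketch, not the actual Brewblox code. The timeout values, `request_with_retry`, and the fake link are all made up for the example; the only point is that the server-side timeout is shorter than the watchdog period, so the server gives up and resends before the controller would be reset.

```python
import asyncio

WATCHDOG_TIMEOUT_S = 0.5  # hypothetical controller watchdog period
SERVER_TIMEOUT_S = 0.2    # hypothetical server timeout, deliberately shorter


async def request_with_retry(send, retries=3):
    """Call send() until it answers within SERVER_TIMEOUT_S or retries run out."""
    for attempt in range(1, retries + 1):
        try:
            return await asyncio.wait_for(send(), timeout=SERVER_TIMEOUT_S)
        except asyncio.TimeoutError:
            # Giving up early means a new message goes out before the
            # watchdog period has elapsed.
            print(f"attempt {attempt} timed out, resending")
    raise ConnectionError("no response after retries")


def make_stalling_link(stalls):
    """Fake link for illustration: the first `stalls` calls hang, later calls answer."""
    state = {"calls": 0}

    async def send():
        state["calls"] += 1
        if state["calls"] <= stalls:
            await asyncio.sleep(10 * WATCHDOG_TIMEOUT_S)  # simulated deadlock
        return "ok"

    return send


def demo():
    """Run one request against a link that stalls once, then recovers."""
    return asyncio.run(request_with_retry(make_stalling_link(stalls=1)))
```

Calling `demo()` times out once and succeeds on the resend. Whether a resend really breaks the stall on the controller is still the unverified part of the theory.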

It’s been 9 days now, no reboots.

Thanks for the update! Could you also please run brewblox-ctl log? It would be useful to check whether any communication errors were reported the last 9 days.

My random rebooting is back… I migrated my BrewBlox setup from my Pi to my Synology to see if that would improve things, but it keeps rebooting. Before, it rebooted but I didn’t really notice, except for the 3 beeps when the Spark booted, but now the Spark is regularly unresponsive and I have to wait for it to reboot.

New logfiles: https://termbin.com/ouku

(I had to set log-drivers due to a different docker config for Synology DSM, that is why some services report an error with the ‘db’ log-driver)

The Spark is also not reachable via ping, only for short bursts at a time:

Request timeout for icmp_seq 24

ping: sendto: Host is down

Request timeout for icmp_seq 25

ping: sendto: Host is down

Request timeout for icmp_seq 26

ping: sendto: Host is down

Request timeout for icmp_seq 27

ping: sendto: Host is down

Request timeout for icmp_seq 28

Request timeout for icmp_seq 29

64 bytes from 10.0.1.127: icmp_seq=30 ttl=255 time=15.397 ms

64 bytes from 10.0.1.127: icmp_seq=31 ttl=255 time=129.175 ms

64 bytes from 10.0.1.127: icmp_seq=32 ttl=255 time=100.081 ms

64 bytes from 10.0.1.127: icmp_seq=33 ttl=255 time=7.805 ms

64 bytes from 10.0.1.127: icmp_seq=34 ttl=255 time=6.204 ms

64 bytes from 10.0.1.127: icmp_seq=35 ttl=255 time=13.440 ms

64 bytes from 10.0.1.127: icmp_seq=36 ttl=255 time=7.715 ms

64 bytes from 10.0.1.127: icmp_seq=37 ttl=255 time=15.891 ms

64 bytes from 10.0.1.127: icmp_seq=38 ttl=255 time=10.907 ms

64 bytes from 10.0.1.127: icmp_seq=39 ttl=255 time=9.000 ms

64 bytes from 10.0.1.127: icmp_seq=40 ttl=255 time=7.260 ms

Request timeout for icmp_seq 41

Request timeout for icmp_seq 42

Request timeout for icmp_seq 43

Request timeout for icmp_seq 44

Request timeout for icmp_seq 45

Request timeout for icmp_seq 46

(The Pi next to the Spark is reachable, so it is not the wifi)
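If anyone wants to compare longer captures, a small script can turn ping output like the above into up/down runs. This is only a sketch: it assumes the macOS-style `ping` text shown here ("Request timeout for icmp_seq N" and "icmp_seq=N" in replies), and `summarize_ping` is a name invented for this example.

```python
import re

REPLY_RE = re.compile(r"icmp_seq=(\d+)")
TIMEOUT_RE = re.compile(r"Request timeout for icmp_seq (\d+)")


def summarize_ping(lines):
    """Group ping results into consecutive (state, first_seq, last_seq) runs."""
    runs = []
    for line in lines:
        reply = REPLY_RE.search(line)
        timeout = TIMEOUT_RE.search(line)
        if reply:
            state, seq = "up", int(reply.group(1))
        elif timeout:
            state, seq = "down", int(timeout.group(1))
        else:
            continue  # e.g. "ping: sendto: Host is down" has no sequence number
        if runs and runs[-1][0] == state:
            # Same state as the previous line: extend the current run.
            runs[-1] = (state, runs[-1][1], seq)
        else:
            runs.append((state, seq, seq))
    return runs


sample = [
    "Request timeout for icmp_seq 27",
    "ping: sendto: Host is down",
    "Request timeout for icmp_seq 28",
    "Request timeout for icmp_seq 29",
    "64 bytes from 10.0.1.127: icmp_seq=30 ttl=255 time=15.397 ms",
    "64 bytes from 10.0.1.127: icmp_seq=31 ttl=255 time=129.175 ms",
]
print(summarize_ping(sample))
```

Run over a full capture, the length of each "up" run gives a rough idea of how long the Spark stays reachable between freezes.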

You do seem to be getting a lot of network resets / interrupts. The history service is reporting reconnects every few seconds. This does correlate with how the Spark is behaving: one of the known issues in the Wifi stack is that it will freeze on network reset.

Is your router channel hopping, or possibly contending with a repeater?

The Wifi is very stable, with 3 Unifi APs in and around the house. The one in the shed near the Spark, however, is connected to the home network via a powerline adapter. I never experienced problems connecting to the Raspberry Pi or iSpindel. Is there any way I can test whether the powerline is causing the trouble?

If that is the case, I would have to go back to the Raspberry Pi and connect the Spark via USB.

If it’s physically feasible, you could try to connect the Pi/NAS to the powerline adapter over cable.

Open the UI + network tab in browser dev tools, and navigate to a dashboard containing a live graph.

(e.g. last 10 minutes)

In the network tab, filter on “values”. You should see an eventsource request called something like values?duration=10m&fields=...

Eventsources for live graphs stay open indefinitely, but do not automatically reconnect. They also do not involve the Spark itself.

Network interrupts will close the eventsource.

If you’re getting those, then the next step would be to try and reduce the number of powerline / AP hops between your laptop and the Brewblox server until you’ve identified the culprit.
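The same check can be done without keeping a browser tab open. The sketch below is an assumption-heavy example, not part of Brewblox: `watch_stream` and `watch_url` are invented names, and the URL must be copied as-is from the network tab. It only counts `data:` lines and reports how long the stream stayed open and why it ended. If the server uses a self-signed certificate, `urllib` will likely refuse the HTTPS connection unless certificate handling is configured separately.

```python
import http.client
import time
import urllib.request


def watch_stream(lines, clock=time.monotonic):
    """Consume an event stream until it ends.

    Returns (events_seen, seconds_open, reason).
    """
    started = clock()
    events = 0
    reason = "closed"
    try:
        for raw in lines:
            line = raw.decode() if isinstance(raw, bytes) else raw
            if line.startswith("data:"):
                events += 1
    except (OSError, http.client.HTTPException) as exc:
        # A network interrupt usually surfaces here as a read error.
        reason = f"error: {exc!r}"
    return events, clock() - started, reason


def watch_url(url):
    """Open the eventsource URL copied from the browser and watch it until it closes."""
    request = urllib.request.Request(url, headers={"Accept": "text/event-stream"})
    with urllib.request.urlopen(request) as response:
        return watch_stream(response)
```

Running `watch_url(...)` from machines at different points in the network (laptop in the shed, a wired machine next to the server) and comparing how long the stream survives should help narrow down which hop is dropping connections.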

In the meantime, fixing Wifi on the Spark is a high-prio issue, but may take some time. So far we’ve spent entirely too much time debugging the system layer Wifi code. The next step likely involves finding a new Wifi stack.

Seems I have a similar issue, but not exactly the same.

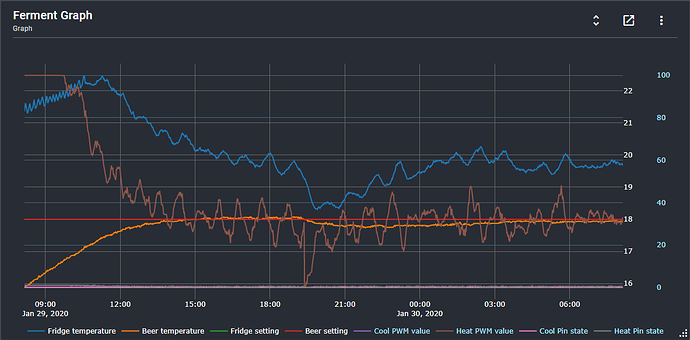

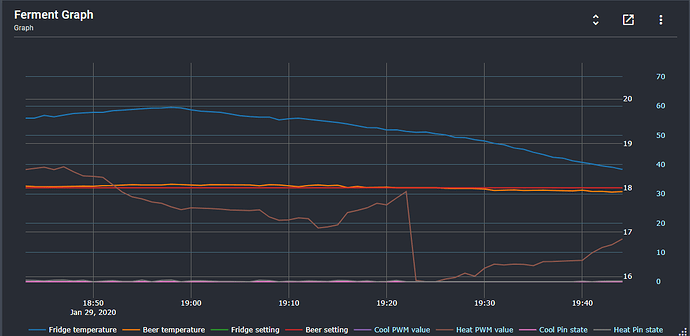

I have set up a Spark and Pi for my brother and started a test. The system was holding temperature great until the Spark rebooted on the 29th at 19:22.

Looking through the log https://termbin.com/s1lm I see this:

spark-one_1 | 2020-01-28T10:47:16.615492321Z 2020/01/28 10:47:16 INFO brewblox_service.repeater Broadcaster resumed OK

spark-one_1 | 2020-01-29T19:22:55.196450488Z 2020/01/29 19:22:55 WARNING py.warnings /usr/local/lib/python3.7/site-packages/brewblox_devcon_spark/communication.py:302: UserWarning: Protocol connection error: read failed: device reports readiness to read but returned no data (device disconnected or multiple access on port?)

spark-one_1 | 2020-01-29T19:22:55.198354690Z warnings.warn(f'Protocol connection error: {exc}')

spark-one_1 | 2020-01-29T19:22:55.198442033Z

spark-one_1 | 2020-01-29T19:22:55.198695676Z 2020/01/29 19:22:55 INFO ..._devcon_spark.communication Disconnected <SparkConduit for /dev/ttyACM0>

spark-one_1 | 2020-01-29T19:22:55.198744686Z 2020/01/29 19:22:55 INFO ..._devcon_spark.communication Starting device discovery, type=all

spark-one_1 | 2020-01-29T19:23:06.032133857Z 2020/01/29 19:23:06 ERROR brewblox_service.repeater Broadcaster error during runtime:

Thanks for your (late night) feedback! I will give it a try, but moving back to the Pi with the Spark connected over USB might be the easier option for now.

Can I do anything to help you debug the Wifi problems? Get logs straight from the Spark, share my setup, etc.? Happy to be of assistance.

Thanks for the offer! So far, symptoms seem well-known, and one of the work items is to introduce rudimentary logging on the Spark.

@rbpalmer That indeed has all the marks of a controller freeze, followed by a watchdog reset. Did the problem reoccur?
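For anyone combing through a long log for events like this, a quick filter can pull out just the warnings and errors. This is a rough sketch that assumes the line layout seen in the excerpt quoted earlier in this thread (service name, `|`, ISO timestamp, date and time, level, logger, message); `find_problems` is a made-up helper, and lines that don't fit that layout (such as the `warnings.warn(...)` continuation) are skipped.

```python
import re

LOG_RE = re.compile(
    r"^(?P<service>\S+)\s+\|\s+(?P<stamp>\S+Z)\s+"
    r"\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\s+"
    r"(?P<level>[A-Z]+)\s+(?P<logger>\S+)\s+(?P<message>.*)$"
)


def find_problems(lines, levels=("WARNING", "ERROR")):
    """Return (timestamp, level, logger) for each log line at the given levels."""
    hits = []
    for line in lines:
        match = LOG_RE.match(line.strip())
        if match and match.group("level") in levels:
            hits.append(
                (match.group("stamp"), match.group("level"), match.group("logger"))
            )
    return hits
```

Saving the log to a file and passing `open("brewblox.log")` (file name is just an example) to `find_problems` gives a short list of timestamps to line up against the moments the Spark rebooted.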

I am brewing today, and 2 to 3 times an hour the Spark stops sending updates to Brewblox and reboots after a little while. I have created 2 sets of logs so far. The Spark is connected to the Raspberry Pi via USB, and my laptop and Pi are on the same hotspot in the shed.

It’s not affecting my brew day, but the random rebooting feels weird. Can I fully disable Wifi now that I am connected via USB? Or can that not be the cause?

You can disable Wifi (hold down the setup button for 10s to clear wifi credentials).

I’m not sure why your Spark is rebooting: I took a look at your logs earlier, and they don’t offer more info than “something went bad, and then the Spark rebooted”.

We recently fixed some bugs that resulted in restarts, but apparently one or more remain. They may be caused by an error in the network stack, they may not be.

The weird thing is that both reset buttons (the top and bottom one?) seem to eventually reboot the Spark, and then it comes back with an IP address.

Can I factory reset the Spark and load a backup? See if that helps my reboot troubles? (Couldn’t find it in the documentation)